“We haven’t really cracked the nut to get people to talk to one another.” That’s Nathan Miller, the former Case consultant who is the founder of Proving Ground, a startup that specializes in helping businesses leverage data to inform their building projects.

Miller made this observation during Thornton Tomasetti’s third annual AEC Technology Symposium and Hackathon, where he and other speakers attempted to address the question of whether technology tools are helping or hindering communication and production among AEC firms and their clients.

While they didn’t provide definitive answers, the speakers took turns describing the necessity and value of creating products that facilitate the exchange and understanding of data-driven design by all levels of users.

A “pleasant interface” is what any tool should strive for, said Andrew Heumann, an Associate and Design Computation Leader with NBBJ, who discussed how computational tools are evolving and engaging with design teams. One example he pointed to is the Human UI (for user interface) plug-in, which makes the widely used Grasshopper algorithmic modeling software more of a customized app and “less intimidating” to designers.

While some of the presentations were thinly veiled product pitches, they didn’t detract from the symposium’s overriding message that technology tools are useful primarily when they smooth a path to a satisfying end result.

“Stop designing around the tool and start designing to the interface,” said Owen Derby, Technical Product Manager and Software Engineer with Flux, whose platform provides cloud-based collaboration tools to exchange data and streamline complex design workflows.

The symposium, held on September 25 at Baruch College in New York, touched on how different tools can be used to collect, analyze, and disseminate information for the purposes of design and construction.

Here’s a recap of some of the hot topics discussed at the event:

Sensors monitor a building’s heartbeat.

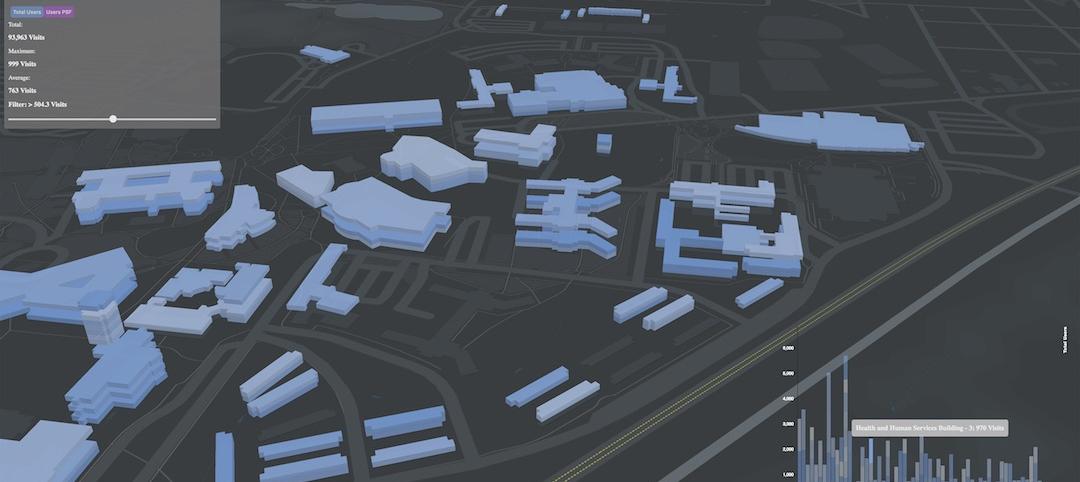

Constantine Kontokosta, PE, AICP, RICS, Deputy Director, Academics, of the NYU Center for Urban Science + Progress, quoted urban activist Jane Jacobs—about cities being laboratories—as a starting point to discuss how sensing technologies are helping researchers, urban planners, developers, and AEC teams understand the pulse of cities in order to predict future changes.

Data, he explained, can and should be teased out of just about anything: smartphones, social media, lighting patterns and plume rates, carbon and steam emissions, even taxi rides. CUSP has been working with New York City on a test project called Quantified Communities in two New York City neighborhoods, Hudson Yards and Lower Manhattan. It uses expanded sensor networks to collect and analyze real-time data to determine how neighborhoods are performing as a means toward urban planning that incorporates how people live, work, and play.

KieranTimberlake, a research-centered design practice, recently converted a historic bottling plant in Philadelphia for its new architectural studio. Christopher Connock, a Researcher and Prototyper at the firm, said the project team determined where the MEP system would be taxed the most by measuring the temperature versus humidity across the building using 124 interior surface sensors, 56 relative temperature/humidity sensors, 60 ceiling sensors, and 120 surface and core structure slab sensors. The project ended up dispensing with a conventional HVAC system in favor of an exhausted cooling system.

Pictured (l. to r.): Josh Wentz, Technical Product Manager of BuildingOS, Lucid; Constantine Kontokosta, PE, AICP, RICS, Deputy Director, Academics, of the NYU Center for Urban Science + Progress; Owen Derby, Technical Product Manager, Software Engineer with Flux Factory; Nathan Miller, Founder, Proving Ground; and Robert Otani, PE, LEED AP BD+C, Principal with Thornton Tomasetti.

But sensors still aren’t universally applied as tools for data collection and analysis. Josh Wentz, Technical Product Manager of BuildingOS with Lucid, lamented that the vast majority of the five million commercial buildings in the U.S. still lack automated technologies. What is out there is usually fragmented, often proprietary, and disconnected from other buildings.

With the emergence of the Internet of Things (IoT), there are now “great opportunities,” Wentz said, to standardize the language of buildings and their occupants’ activities in order to quantify the value of different post-construction performance metrics. Lucid is currently aggregating and synthesizing data from 10,000 buildings and 50,000 devices via its BuildingOS cloud-based interface. Lucid plans to launch an application programming interface (API) for this product next year.

Open source collaboration moves the needle.

In September, Thornton Tomasetti’s CORE Studio released Spectacles, an HTML5 BIM Web viewer designed to be hacked, extended, and modified. This platform is one of several that CORE Studio has developed as open source projects.

“The future is a lot flatter than before,” observed Gareth Price, Technical Director for Ready Set Rocket, a digital marketing agency. “Open source software gives the freedom to use, study, modify, and share information.”

During the symposium, a number of speakers touched on the advantages of open source for producing the best tools. Matt Jezyk, Senior Product Line Manager, AEC Conceptual Design Products, with Autodesk, explained how his company’s team developed its Dynamo suite as a side project in collaboration with architects, engineers, and programmers. “It came out of the needs of the AEC community,” said Jezyk.

He said Autodesk is now building more of its tools so they can interface with other tools. For example, its VRX (virtual reality exchange) platform “has become a collaborative tool” through which users can share BIM models. He added that Dynamo and other Autodesk products are freely accessible on GitHub.com.

Other speakers, though, lamented that open source is still more of an ideal than a reality for AEC firms and their clients, who view data as proprietary and a competitive advantage.

Challenging assumptions with better data.

“Failure is the new R&D,” Price exclaimed, to make a point about the value of experimentation in pushing the industry forward. Stephen Van Dyck, a Partner at LMN Architects, and Scott Crawford, a Design Technologist at the firm, confirmed that notion as they discussed the evolving role of R&D in their firm’s recent public works projects.

LMN had three months to deliver the design for the Global Center for Health Innovation in Cleveland, so it employed a plug-in that allowed for solutions in fabrication and a design study that ultimately became the building. In the process, LMN was able to deliver a façade system on time at $65/sf.

Luc Wilson runs the X-Information Modeling think tank at Kohn Pedersen Fox, which focuses on using urban data and digital analysis tools. During the symposium, Wilson spoke about the efficacy of creating diagnostic tools that are capable of conducting an “urban MRI” by integrating a project’s competing objectives, visualizing data, and coming up with multiple options.

Ana Garcia Puyol, Computational Designer with Thornton Tomasetti, speaks to the crowd.

In one case study Wilson presented, One Vanderbilt in New York, the city cared most about preserving pedestrian space and street daylighting, whereas the developer was primarily interested in optimizing the value of the surface area. To reconcile those objectives, KPF tested a variety of designs for a better rate of performance within the design scheme. It also engaged city planners on zoning to test their assumptions. The same was true of its Lower Residential Block project, part of a master plan at London’s Covent Garden.

“We needed to show why the historic block typology wouldn’t work,” said Wilson. The way KPF did this was by comparing the density of this block with densities of similar blocks in China and New York. It then calibrated the block typology with the preferred block density.

Related Stories

Building Technology | Dec 20, 2018

Autodesk is spending $1.15 billion to acquire two construction tech providers

PlanGrid and BuildingConnected are the latest pieces in the company’s quest to digitize the construction industry.

Building Technology | Dec 18, 2018

Data and analytics are becoming essential for EC firms competing to rebuild America’s infrastructure

A new paper from Deloitte Consulting advises companies to revise their strategies with an eye toward leveraging advanced technologies.

Sponsored | BIM and Information Technology | Oct 15, 2018

3D scanning data provides solutions for challenging tilt-up panel casino project

At the top of the list of challenges for the Sandia project was that the building’s walls were being constructed entirely of tilt-up panels, complicating the ability to locate rebar in event future sleeves or penetrations would need to be created.

Sponsored | BIM and Information Technology | Oct 15, 2018

3D scanning data provides solutions for challenging tilt-up panel casino project

At the top of the list of challenges for the Sandia project was that the building’s walls were being constructed entirely of tilt-up panels, complicating the ability to locate rebar in event future sleeves or penetrations would need to be created.

BIM and Information Technology | Aug 16, 2018

Say 'Hello' to erudite machines

Machine learning represents a new frontier in the AEC industry that will help designers create buildings that are more efficient than ever before.

BIM and Information Technology | Aug 16, 2018

McKinsey: When it comes to AI adoption, construction should look to other industries for lessons

According to a McKinsey & Company report, only the travel and tourism and professional services sectors have a lower percentage of firms adopting one or more AI technologies at scale or in a core part of their business.

BIM and Information Technology | Jul 30, 2018

Artificial intelligence is not just hysteria

AI practitioners are primarily seeing very pointed benefits within problems that directly impact the bottom line.

AEC Tech | Jul 24, 2018

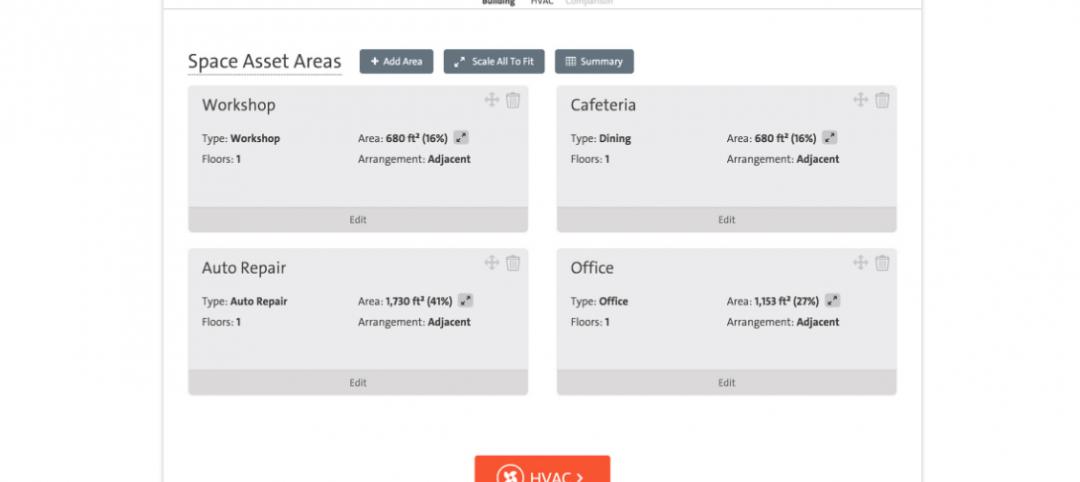

Weidt Group’s Net Energy Optimizer now available as software as a service

The proprietary energy analysis tool is open for use by the public.

Accelerate Live! | Jul 17, 2018

Call for speakers: Accelerate AEC! innovation conference, May 2019

This high-energy forum will deliver 20 game-changing business and technology innovations from the Giants of the AEC market.

BIM and Information Technology | Jul 9, 2018

Healthcare and the reality of artificial intelligence

Regardless of improved accuracy gains, caregivers may struggle with the idea of a computer logic qualifying decisions that have for decades relied heavily on instinct and medical intuition.